Benchmark 5.0: Qwen3 and ChatGPT o4 Mini Join the League

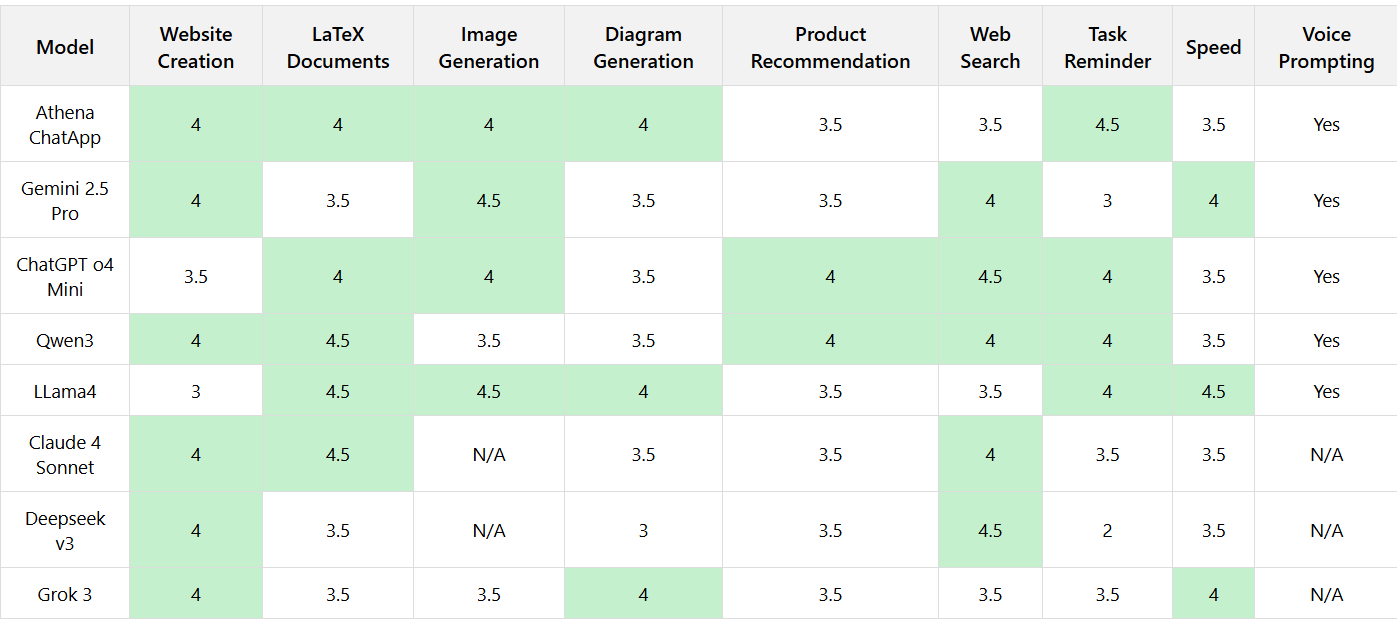

It’s benchmark season again, and we’re back with a fresh update, this time featuring two new additions: Qwen3 and ChatGPT o4 Mini. As the AI landscape evolves rapidly, these entrants bring capabilities that push the boundaries of what assistants can do. To assess how well today’s top models actually perform, we used practical, real-world criteria such as website creation, LaTeX document generation, diagram support, product recommendations, and task reminders.

Let’s begin with Qwen3, a model that combines speed with strong reasoning. Known for its hybrid reasoning and robust multilingual support, Qwen3 operates in two modes: Non-Thinking Mode for fast, straightforward responses, and Thinking Mode for logic-intensive tasks such as solving math problems, generating complex code, and working through intricate queries. It performs strongly in LaTeX documents, website development, and task reminders, scoring well in all three. Its LaTeX output for academic content such as quizzes and technical documents, along with its website creation, stands out as among the best in class. Its speed score is slightly lower because of longer response times during website creation, but its efficiency across other tasks remains highly competitive.

Next, we examine ChatGPT o4 Mini, a compact model built for efficiency. It impresses with fast reasoning in math, data analysis, and programming. More notably, it handles images such as whiteboard sketches and diagrams with a level of visual understanding uncommon in smaller models. Cost-efficiency is one of its major advantages: it is significantly cheaper than GPT-4o, which makes it well suited to high-volume use cases. It posts high scores in web search, LaTeX, diagram generation, and product recommendations. Its speed rating dips due to slower image generation, but its overall performance remains consistent and sharp.

Looking at the broader picture, each model plays to its strengths. Gemini 2.5 Pro remains a top-tier pick for image generation and rapid research tasks. LLaMA4 scores well in technical visuals and reasoning. Claude 4 Sonnet offers excellent support for academic and professional writing. Deepseek v3 keeps its edge in web search despite weaker task reminder capabilities. Grok 3 delivers balanced, dependable performance across the board. The entry of Qwen3 and o4 Mini adds valuable diversity to the field and makes the competition tighter.

And then there’s Athena, the benchmark’s standout performer, with high and consistent scores. Scoring 4 or above in nearly every category, Athena remains the only model to offer exceptional strength in creativity, productivity, and task management, complete with voice prompting. Whether you’re building a website, solving technical problems, managing your day-to-day, or planning your next vacation, Athena handles it with ease. Its added strength as a smart travel recommender shows that it is not only well-rounded but ahead of the curve: an all-in-one AI companion that sets a new bar for versatility, intelligence, and user experience.

Join the Athena Community!

💬 Discord: Join the conversation

📺 YouTube: Watch Athena in action

📸 Instagram: Follow for updates

🎶 TikTok: Check out AI-powered tricks

💼 LinkedIn: Connect professionally