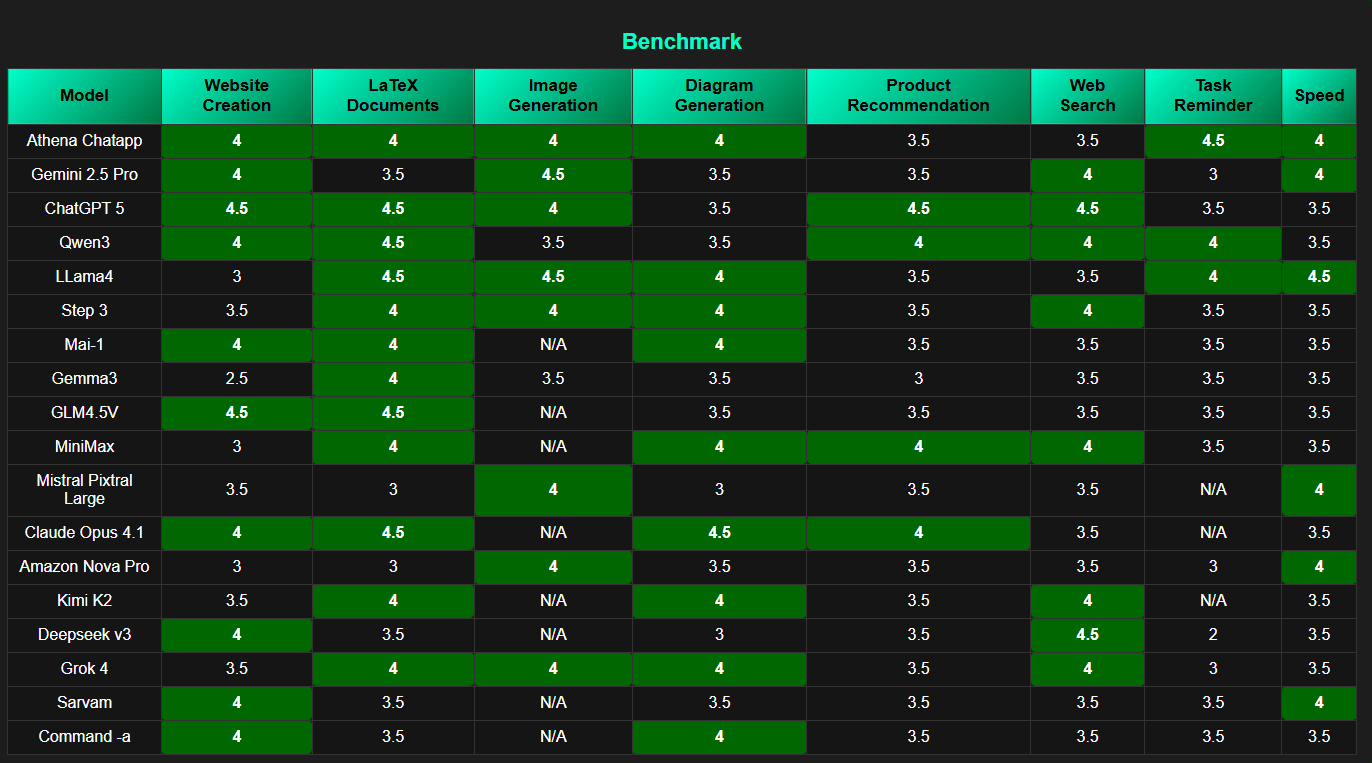

Measuring Excellence Across the Benchmarks

Every few months, the AI world feels like a competition arena. New models enter, each claiming to outshine the rest. Some excel in speed, some in creativity, and others in reliability. But which ones actually live up to the hype? To answer that, we turn to benchmarking. This round is special: we’re not only comparing well-known giants like ChatGPT, Gemini, and Claude, but also welcoming two newcomers to our benchmarks, Command A by Cohere and Sarvam-M by Sarvam AI. By testing every model on website building, LaTeX drafting, visual creation, recommendations, web search, reminders, and speed, we get a clear picture of their strengths and weaknesses. It’s not just about scores; it’s about discovering which AI tools can truly make a difference in everyday life.

Command A, Cohere’s new state-of-the-art generative model, is built for enterprises that demand fast, secure, and high-quality AI. It is optimized to deliver maximum performance on minimal hardware and can run on just two GPUs, making it both powerful and efficient. The model shines in diagram generation, web development, and LaTeX document creation, proving its strength with technical and structured outputs. However, it currently lacks image generation and has room to grow in product recommendations. Its speed is a clear advantage, giving businesses reliable performance without compromise.

Sarvam-M, a 24-billion-parameter model fine-tuned on Indic data, is designed to push the boundaries of AI performance in Indian languages, reasoning, and coding. One of its standout strengths is web development, where it adapts well to building modern, functional sites. It also performs well in web search, with impressive speed that makes it reliable for quick queries. Diagram generation, however, is still an area where the model has room to grow. It currently has no image generation capability, and its LaTeX document drafting could also improve. Overall, Sarvam-M is a promising addition, balancing real strengths with clear opportunities for refinement.

Looking across all models, clear leaders emerge with standout scores of 4.5, such as ChatGPT 5, Qwen3, Llama 4, and GLM-4.5V, which excel in areas like LaTeX documents, website creation, and speed. Models with consistent scores of 4, including Athena Chatapp, Claude Opus 4.1, Sarvam-M, and Command A, show balanced reliability across tasks and prove themselves dependable all-rounders. Models scoring 3 or 2.5, like Gemma 3, Amazon Nova Pro, and MiniMax, still have significant room to grow, particularly in website creation and LaTeX tasks.

When it comes to image generation, strong performers include Gemini 2.5 Pro, ChatGPT 5, Llama 4, Mistral Pixtral Large, and MiniMax, while others like Sarvam-M and Command A currently lack the feature. In diagram generation, Claude Opus 4.1, Llama 4, and Command A stand out. The landscape is clearly diverse, with some models pushing boundaries while others need to strengthen their weaker areas. This mix shows how benchmarking gives us a true picture of where each model excels and where it falls short.

Athena isn’t just another AI model: it’s the culmination of what the best models offer, refined into one seamless experience. Whether it’s handling complex documents, generating creative content, or providing intelligent recommendations, Athena does it all with unmatched precision and speed. It’s like having a versatile expert by your side, ready to adapt to any task without compromise. No need to juggle multiple tools or settle for one specialty; Athena combines reliability, creativity, and intelligence into a single, effortless solution. Choosing Athena means choosing an AI that truly works for you, elevating productivity and innovation beyond the ordinary.

Join the Athena Community!

💬 Discord: Join the conversation

📺 YouTube: Watch Athena in action

📸 Instagram: Follow for updates

🎶 TikTok: Check out AI-powered tricks

💼 LinkedIn: Connect professionally